What Is Big-O Complexity Analysis?

In this lesson, you will learn about algorithm complexity analysis and the common classes of Big-O complexity.

Introduction to complexity analysis

Complexity analysis is a crucial aspect of algorithm design and analysis. It involves evaluating the efficiency and resource usage of algorithms.

By understanding complexity analysis, programmers can assess the performance of their code, identify bottlenecks, and make informed decisions to optimize algorithms for faster and more efficient execution.

Developers often overlook the complexity of the code they write. Just because your computer executes the code doesn't mean it is scalable: a scalable algorithm keeps performing acceptably as its input grows large.

To write optimal code for a given problem, you need to consider both the time the algorithm takes to run and the memory (space) it uses.
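As a rough illustration of weighing time against space, the sketch below checks a list for duplicates in two ways. The function names are hypothetical and chosen only for this example. The first version uses no extra memory but compares every pair of elements; the second trades extra memory for fewer steps.

```python
def has_duplicates_quadratic(items):
    """Compare every pair: about n*(n-1)/2 comparisons, O(1) extra space."""
    n = len(items)
    for i in range(n):
        for j in range(i + 1, n):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_with_set(items):
    """Remember values already seen: O(n) time on average, O(n) extra space."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False


print(has_duplicates_quadratic([3, 1, 4, 1, 5]))  # True
print(has_duplicates_with_set([3, 1, 4, 5]))      # False
```

Both functions return the same answers; which one is preferable depends on whether running time or memory is the tighter constraint.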

Ok, what does this have to do with data structures?

Choosing the right data structure for an algorithm is essential because it directly impacts the algorithm's efficiency, performance, and scalability. Here are a few reasons why selecting the appropriate data structure is crucial:

- Efficiency: Different data structures have varying time and space complexity for performing operations like insertion, deletion, search, and traversal. Choosing the most efficient data structure for the specific requirements of the algorithm can significantly improve its execution time and resource utilization.

- Functionality: Each data structure is designed to serve specific purposes and provide different functionalities. For instance, arrays are suitable for random access, linked lists excel at efficient insertion and deletion, and trees are ideal for hierarchical structures. Matching the algorithm's needs with the functionality of a suitable data structure ensures optimal implementation.

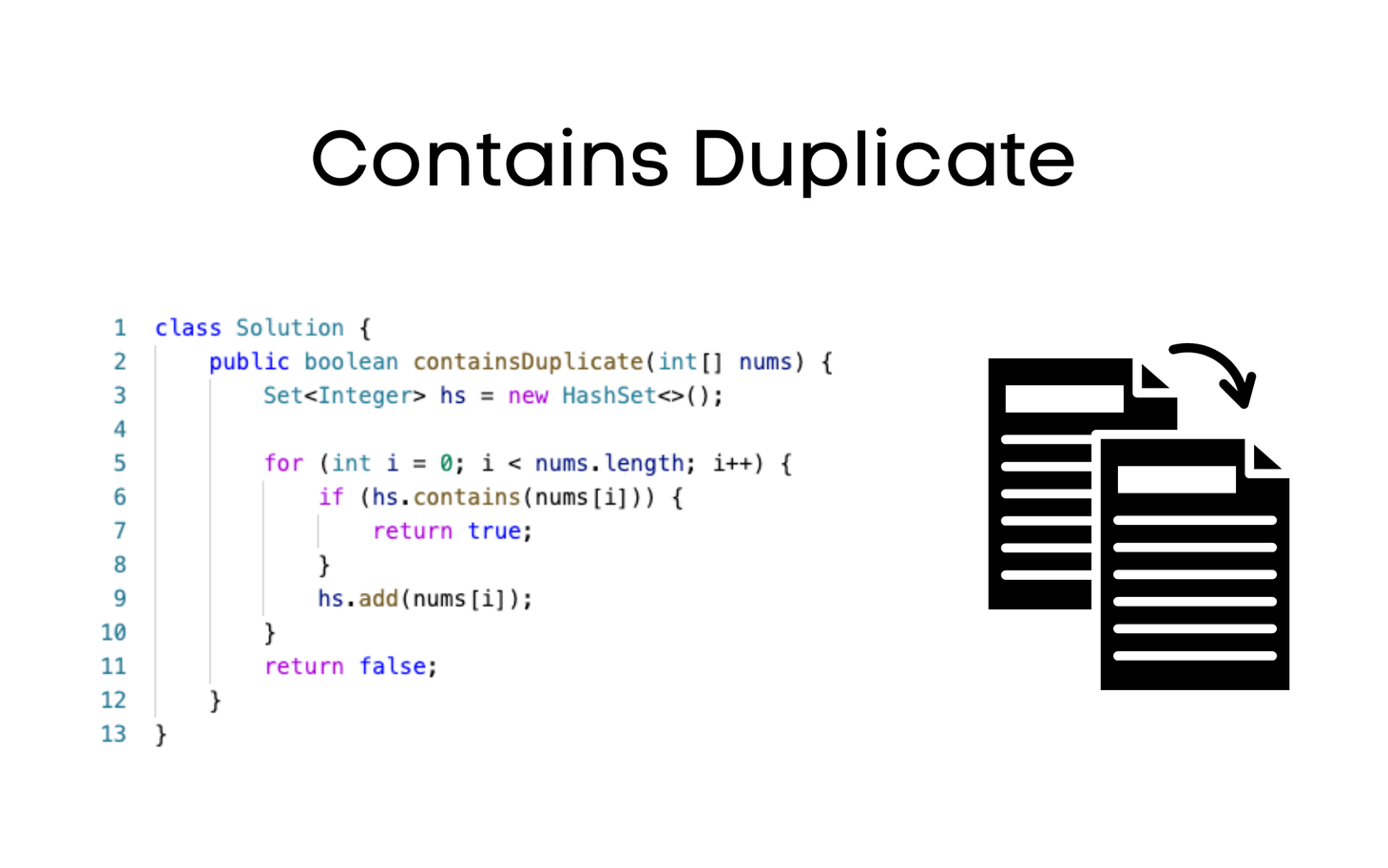

- Scalability: The scalability of an algorithm refers to its ability to handle larger input sizes efficiently. The choice of a scalable data structure enables the algorithm to maintain its efficiency as the data volume grows. For example, a hash table for quick lookups can be more scalable than a linear search through an array.

- Memory Efficiency: Data structures have different memory requirements. Choosing a data structure that optimizes memory usage can be crucial, especially in resource-constrained environments. This helps minimize memory overhead and allows efficient utilization of available resources.

- Maintainability and Readability: Choosing an appropriate data structure improves the code's readability and maintainability. When the data structure aligns with the problem's nature, the code becomes more intuitive and easier for both the original developer and other team members to understand and maintain.
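The hash table versus linear search comparison mentioned under scalability can be sketched as follows. This is a minimal example under assumed data: the `usernames` list and the `linear_search` helper are made up for illustration, and Python's built-in `dict` stands in for the hash table.

```python
def linear_search(items, target):
    """Return (found, comparisons) for a left-to-right scan of a list."""
    comparisons = 0
    for item in items:
        comparisons += 1
        if item == target:
            return True, comparisons
    return False, comparisons


# Hypothetical data: 1000 usernames stored in a list and in a dict (hash table).
usernames = [f"user{i}" for i in range(1000)]
username_index = {name: i for i, name in enumerate(usernames)}

found, comparisons = linear_search(usernames, "user999")
print(found, comparisons)           # True 1000
print("user999" in username_index)  # True
```

The list scan needs one comparison per element in the worst case, so its cost grows with the data. The dictionary lookup takes roughly constant time on average regardless of how many names are stored.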

In summary, selecting the right data structure is vital as it impacts the algorithm's efficiency, functionality, scalability, memory utilization, and code maintainability.

The right choice helps the algorithm perform well and handle the problem effectively.

Types of complexities

In computer science, several common Big-O complexity classes describe the efficiency and scalability of algorithms. The most prominent include:

- Constant Time Complexity: O(1). The algorithm's performance remains constant regardless of the input size. The execution time and resource usage do not depend on the input.

- Logarithmic Time Complexity: O(log n). The algorithm's cost grows logarithmically with the input size. Examples include binary search and operations on balanced trees.

- Linear Time Complexity: O(n). The algorithm's cost grows linearly with the input size. The execution time and resource usage are directly proportional to the input.

- Linearithmic Time Complexity: O(n log n). The algorithm's cost grows in proportion to n multiplied by log n. It is commonly seen in efficient sorting algorithms such as merge sort and quicksort (on average).

- Quadratic Time Complexity: O(n^2). The algorithm's cost grows quadratically with the input size. Doubling the input roughly quadruples the execution time and resource usage.

- Exponential Time Complexity: O(2^n). The algorithm's cost grows exponentially with the input size. The execution time and resource usage increase so rapidly that the algorithm becomes impractical for large inputs.

- Factorial Time Complexity: O(n!). The algorithm's cost grows factorially with the input size. It is the fastest-growing class listed here; the execution time becomes excessively large even for small inputs, as in a brute-force search over every permutation of the input.
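To make the growth rates concrete, the sketch below counts basic steps for a few of these classes instead of measuring wall-clock time. The function names are hypothetical, and each function is a simplified stand-in for an algorithm in that class rather than a real workload.

```python
def constant_steps(n):
    """O(1): one step no matter how large n is."""
    return 1


def logarithmic_steps(n):
    """O(log n): halve n until it reaches 1, as binary search halves its range."""
    steps = 0
    while n > 1:
        n //= 2
        steps += 1
    return steps


def linear_steps(n):
    """O(n): one step per element."""
    steps = 0
    for _ in range(n):
        steps += 1
    return steps


def quadratic_steps(n):
    """O(n^2): one step per pair of elements (nested loops)."""
    steps = 0
    for _ in range(n):
        for _ in range(n):
            steps += 1
    return steps


def exponential_steps(n):
    """O(2^n): each call branches into two, giving 2^n leaf calls."""
    if n == 0:
        return 1
    return exponential_steps(n - 1) + exponential_steps(n - 1)


for n in (8, 16):
    print(n, constant_steps(n), logarithmic_steps(n), linear_steps(n),
          quadratic_steps(n), exponential_steps(n))
# 8 1 3 8 64 256
# 16 1 4 16 256 65536
```

Doubling n from 8 to 16 adds a single step to the logarithmic count, doubles the linear count, quadruples the quadratic count, and squares the exponential count.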

These are the most commonly encountered Big-O complexity classes. Understanding these notations helps programmers assess the efficiency and scalability of algorithms, enabling them to make informed decisions about algorithm selection, optimization, and resource allocation.

In the next lesson, you will learn about each of these in detail, with respect to both time and space complexity.
